AI Governance in Healthcare: How To Ensure a Safe, Ethical, and Effective AI Implementation for Your Organization

Discover how AI governance helps healthcare organizations reduce risks like bias, errors, and data breaches while improving trust and compliance.

September 2, 2025

Key Takeaways:

• AI in healthcare is growing fast, but most organizations lack formal governance to ensure safety, fairness, and compliance.

• Strong AI governance frameworks help manage risks like bias, hallucinations, and data privacy while keeping clinicians in control.

• Choosing the right vendor means demanding explainability, clear oversight processes, and proof of real-world performance.

• Continuous monitoring, workforce readiness, and integration into existing workflows are what turn AI from risky pilots into trusted, ROI-positive tools.

• Human-in-the-loop design is essential, as AI should augment clinicians, not replace their judgment.

If you’ve ever come across conflicting headlines about “AI transforming healthcare” and “AI gone wrong in medicine,” you’re not alone. In healthcare, the promise of AI is monumental, but so are the risks.

According to the AMA 2025 Survey, 66% of physicians reported using AI in their practices, compared to just 38% in 2023. Despite this rapid uptake, fewer than a fifth have formal AI governance frameworks to ensure these tools are safe, fair, and effective.

In this guide, you’ll get a straightforward breakdown of AI governance in healthcare—what it is, why it matters, and how it helps manage risks like bias, hallucinations, and data privacy. We’ll walk through best practices for building governance structures to help you kick off AI implementation in your healthcare organization.

What is AI Governance in Healthcare and Why Is It Important?

AI governance in healthcare is a set of structures, policies, checks, and oversights that make sure AI tools are safe, fair, and do what they’re supposed to do. It’s about giving hospitals, doctors, and patients confidence that the AI helping make decisions isn’t biased, won’t make harmful mistakes, and keeps sensitive data secure.

Why does AI governance matter? Because no AI is perfect. Its mistakes can lead to misdiagnoses or biased treatment decisions, putting patients and health systems at risk.

According to the AMA’s Governance for Augmented Intelligence report, strong AI governance helps healthcare organizations:

- Confidently identify and roll out AI tools that fit their needs

- Create standardized ways to evaluate and reduce risks before harm happens

- Keep detailed records that boost transparency and accountability

- Provide continuous oversight to catch and fix issues early

- Ease clinician workload by streamlining administrative tasks

- Foster teamwork and alignment across departments for consistent, safe AI use

How Is AI Currently Being Used in Healthcare?

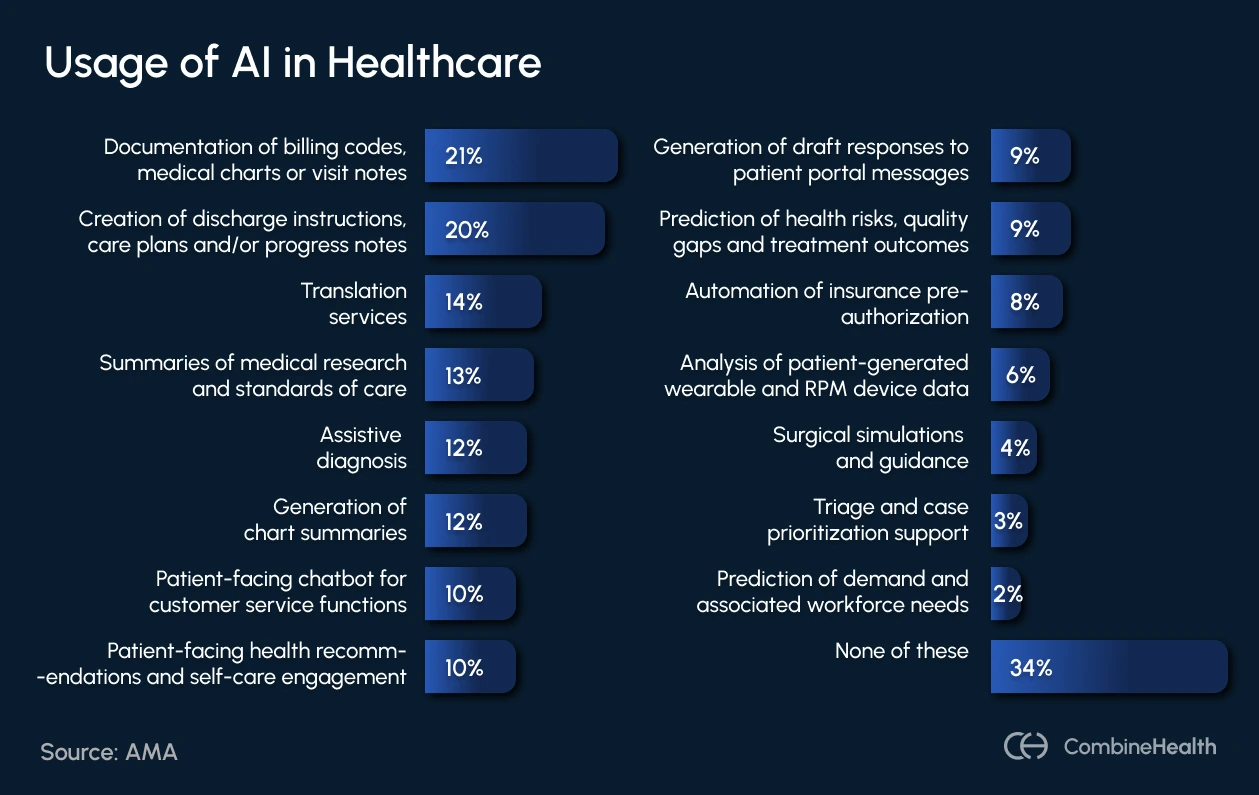

Healthcare organizations are already experimenting with AI across a wide spectrum of tasks. But the diversity of use cases also underscores why governance matters: without clear standards for oversight, transparency, and accountability, these tools risk becoming fragmented pilots instead of delivering safe, system-wide impact.

Here’s a breakdown of AI use cases in healthcare by AMA:

What Challenges Can AI Governance Help Address?

Here are some of the biggest challenges that arise when healthcare organizations use AI:

Bias in AI Models

AI tools learn from existing data, and if that data contains any biases (whether related to race, gender, or socioeconomic status), those biases can be baked into the algorithms.

For example, algorithms trained on incomplete or unrepresentative data sets might underdiagnose certain groups or prioritize care unevenly.

A notable case involved an AI that used healthcare spending as a stand-in for health needs. Because less money had been spent on their care, people of color were assessed as healthier than equally sick white patients.
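One way a governance team might look for this kind of uneven performance is to compare error rates across patient groups. The sketch below is illustrative only: the record fields (`group`, `needs_care`, `flagged`) and the sample data are hypothetical, and a real audit would rely on validated outcome labels and much larger samples.

```python
from collections import defaultdict

def false_negative_rate_by_group(records):
    """Return {group: share of patients who needed care but were not flagged}."""
    missed = defaultdict(int)  # needed care, but the model did not flag them
    needed = defaultdict(int)  # needed care according to the ground truth
    for r in records:
        if r["needs_care"]:
            needed[r["group"]] += 1
            if not r["flagged"]:
                missed[r["group"]] += 1
    return {g: missed[g] / needed[g] for g in needed}

# Hypothetical audit sample (not real patient data)
records = [
    {"group": "A", "needs_care": True, "flagged": True},
    {"group": "A", "needs_care": True, "flagged": True},
    {"group": "A", "needs_care": True, "flagged": False},
    {"group": "A", "needs_care": True, "flagged": True},
    {"group": "B", "needs_care": True, "flagged": False},
    {"group": "B", "needs_care": True, "flagged": False},
    {"group": "B", "needs_care": True, "flagged": True},
    {"group": "B", "needs_care": True, "flagged": False},
    {"group": "B", "needs_care": False, "flagged": False},
]

rates = false_negative_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups does not prove bias on its own, but it is a reasonable trigger for a closer review of the training data and the target the model was built to predict.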

AI Hallucinations and Errors

AI systems, especially generative models, sometimes produce outputs that sound plausible but are factually incorrect or misleading (also known as “hallucinations”). In healthcare, this can mean incorrect diagnoses, inaccurate medical histories, or inappropriate treatment suggestions.

Data Privacy and Security Risks

Healthcare data is highly sensitive, and AI systems often require access to large volumes of patient information.

Without tight governance controls, AI systems can expose patient data, violate HIPAA rules, or become targets for cyberattacks.

Transparency and Explainability

Many AI systems blurt out answers, but clinicians can’t see how those answers were derived. That undermines both trust and regulatory compliance.

Example: An AI reviewing urgent care notes flags a patient as having strep throat instead of a seasonal allergy, but doesn’t explain its rationale.

Liability

Who’s on the hook when AI makes a bad call—the clinician using it, the hospital deploying it, or the vendor building it?

Latest Policies Around AI Governance

Below is an overview of the latest policies shaping how healthcare organizations are expected to adopt AI responsibly:

How To Ensure Your Vendor Follows the Best AI Governance Practices

Choosing an AI partner means evaluating not just their technology, but also how they handle transparency, compliance, and accountability. Here’s what to look for when assessing whether a vendor follows best AI governance practices:

1. Establish a Comprehensive AI Governance Framework

Every successful AI program in healthcare begins with a strong governance framework. This framework should clearly connect AI initiatives to your organization’s strategic goals, backed by strong leadership and the right mix of stakeholders.

You can start by forming an AI governance board or interdisciplinary working group, with senior leadership that actually owns the AI strategy. This may include leaders from clinical care, nursing, compliance, IT, data science, legal, and even patient experience. Their role can be to:

- Standardize the intake process

- Vet vendor claims

- Set transparency rules (like disclosing AI use to patients and clinicians)

- Draft AI usage policies, with clear definitions of what’s allowed and what’s not

- Define risk attribution between the health system and the AI vendor

- Define training requirements

Beyond review processes, healthcare organizations need to revisit and update related policies, such as anti-discrimination policies, codes of conduct, consent forms, and staff training, so that they account for AI.


2. Conduct Rigorous Vendor Evaluation and Contracting

Choosing the right AI vendor shouldn’t be just about the latest features or a slick demo. What you really need is to evaluate a vendor in terms of trust, accountability, and long-term safety. This means building a structured evaluation process backed by strong contractual protections.

A good first step is asking vendors to complete a detailed intake form, which should include details like:

- A clear description of the AI model and how it works

- Validation studies and real-world performance data

- Details on training data, including demographic representation

- Data privacy and security protocols

- Interoperability with your existing systems

- Plans for ongoing support, maintenance, and updates

- Cost and licensing structure

- Company background and compliance with federal and state regulations
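To keep intake reviews consistent, the form above could be captured as a structured record so incomplete submissions are caught before they reach the governance board. This is a minimal sketch: the class name, field names, and sample answers are hypothetical and simply mirror the list above.

```python
from dataclasses import dataclass, fields

@dataclass
class VendorIntake:
    """One free-text answer per intake topic; an empty string means unanswered."""
    model_description: str = ""
    validation_evidence: str = ""
    training_data_demographics: str = ""
    privacy_security_protocols: str = ""
    interoperability: str = ""
    support_and_updates: str = ""
    cost_and_licensing: str = ""
    company_and_compliance: str = ""

def missing_sections(form):
    """List the intake topics the vendor left blank."""
    return [f.name for f in fields(form) if not getattr(form, f.name).strip()]

# Hypothetical partial submission
form = VendorIntake(
    model_description="Gradient-boosted classifier for claim denial risk",
    privacy_security_protocols="Encrypted at rest and in transit; BAA available",
)
print(missing_sections(form))
```

A reviewer could refuse to schedule a demo until `missing_sections` returns an empty list.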

Once you’ve vetted the vendor, the legal review comes next. It is just as important, and the contract must explicitly address:

- Liability and accountability if something goes wrong

- Compliance responsibilities (HIPAA, FDA, state laws)

- Data ownership and usage rights

- Risk mitigation strategies

- Intellectual property protections

3. Implement Robust Oversight and Continuous Monitoring

Once a tool is live, the real work begins: making sure it performs as promised, stays safe, and adapts as your environment changes.

Here’s what to put in place:

- Routine checks on output quality, algorithm performance, and patient safety impact

- Bias and ethics monitoring to spot unintended consequences early

- User feedback loops so frontline staff can raise concerns without friction

- Performance drift tracking, including:

  - Data drift: when underlying patient data patterns change

  - Concept drift: when clinical practice evolves and the AI no longer fits

According to the AMA’s guidelines, the best way to manage this is to assign a multidisciplinary oversight team consisting of:
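Data drift in particular lends itself to a simple automated check. The sketch below uses the Population Stability Index (PSI), a common way to compare how one input feature is distributed at validation time versus in production. The feature (patient age), the bin edges, and the sample values are hypothetical, and the often-quoted 0.25 alert level is a rule of thumb rather than a standard.

```python
import math

def population_stability_index(baseline, current, edges):
    """PSI between two samples of one numeric feature, binned by `edges`."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # index of the bin v falls into
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty
        return [max(c / len(values), 1e-6) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))

# Hypothetical patient ages: at validation time vs. this month
edges = [40, 65]
baseline_ages = [25, 34, 47, 52, 61, 68, 73, 80]
current_ages = [30, 58, 66, 70, 71, 75, 82, 88]

psi_same = population_stability_index(baseline_ages, baseline_ages, edges)
psi_shifted = population_stability_index(baseline_ages, current_ages, edges)
print(psi_same, round(psi_shifted, 3))
```

A check like this only looks at inputs. Concept drift usually needs outcome data as well, for example by re-scoring the model against recently confirmed diagnoses.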

- A clinical champion who can assess safety and workflow impact

- A data scientist or statistician who understands the model inside out

- An administrative leader who keeps quality and compliance in view

Equally important: Define clear escalation pathways

If unsafe or biased outputs appear, staff should know exactly how to report them—and vendors should be held accountable for timely fixes.

4. Foster Workforce Readiness and Seamless Workflow Integration

AI succeeds in healthcare, as in any other environment, only if it fits: teams should be able to use it without feeling overwhelmed. Tools that add extra clicks, extra screens, or extra mental overhead don’t last long in a clinical environment.

That’s why integration matters as much as innovation. The best AI systems act like silent assistants—working in the background, pulling data directly from the EHR, and surfacing insights only when they’re needed.

But there’s one non-negotiable condition: clinicians stay in control. This means every output should be editable, and every decision overrideable.
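In software terms, "clinicians stay in control" can be enforced by the data model itself. The sketch below is one hypothetical way to do it: an AI output cannot be used downstream until a clinician has either approved it or replaced it. The class, method names, and clinician ID are illustrative, not taken from any specific product.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Suggestion:
    ai_text: str
    clinician_text: Optional[str] = None  # set when a clinician edits or overrides
    approved_by: Optional[str] = None     # clinician who signed off

    def approve(self, clinician_id):
        """Accept the AI output as written."""
        self.approved_by = clinician_id

    def override(self, clinician_id, new_text):
        """Replace the AI output with the clinician's own wording."""
        self.clinician_text = new_text
        self.approved_by = clinician_id

    @property
    def final_text(self):
        """Text released downstream; blocked until a clinician signs off."""
        if self.approved_by is None:
            raise PermissionError("No clinician has signed off on this output")
        if self.clinician_text is not None:
            return self.clinician_text
        return self.ai_text

suggestion = Suggestion(ai_text="Assessment: strep throat")
suggestion.override("dr_example", "Assessment: seasonal allergy")
print(suggestion.final_text)
```

Keeping both `ai_text` and `clinician_text` also leaves an audit trail of how often clinicians override the tool, which is useful input for ongoing monitoring.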

Technology alone won’t close the gap. The U.S. Department of Health and Human Services has emphasized that training and education programs must be part of every rollout for building an AI-empowered workforce. Staff must understand:

- What an AI can do

- What its limitations are

- How to question outputs

- How to escalate concerns when something doesn’t look right

And it helps with workforce retention, too. AI that handles labor-intensive tasks (like ambient clinical documentation) frees clinicians from endless paperwork, reduces burnout, and gives them more face time with patients.

Build Trust by Keeping AI Accountable

Without oversight, even the most promising AI models can quietly drift—shifting behavior, losing accuracy, or veering into “laziness” without anyone noticing.

Take GPT-4, for example. In late 2023, developers joked about it growing “lazy” with coding tasks. Because these models are closed-source, we can’t peek under the hood, so errors only come to light when communities catch them through experience and comparison.

It underlines a core truth: you can’t fix what you don’t measure!

That’s where structured observability models come in. They enable automated evaluation, regression testing, root-cause analysis, and dataset enrichment, all in real time.

At CombineHealth, we follow this principle closely. With continuous evaluation, observability, and governance baked into our processes, we:

- Actively monitor prompt drift

- Use leaderboard-style benchmarks to catch regressions early

- Iterate promptly

It’s how we ensure that while the foundational LLMs may waver, your application remains stable, predictable, and, most importantly, trustworthy.
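As a rough illustration of how a benchmark can catch regressions, the sketch below compares per-task scores for a current and a candidate model version and flags any task that dropped by more than a set tolerance. It is a generic example and not a description of CombineHealth's internal tooling; the task names, scores, and the 0.02 tolerance are all hypothetical.

```python
def find_regressions(baseline, candidate, tolerance=0.02):
    """Return {task: (old_score, new_score)} where the score fell by more than `tolerance`."""
    return {
        task: (baseline[task], candidate[task])
        for task in baseline
        if task in candidate and baseline[task] - candidate[task] > tolerance
    }

# Hypothetical benchmark scores (higher is better)
baseline_scores = {"coding_accuracy": 0.94, "denial_summary": 0.90, "note_extraction": 0.88}
candidate_scores = {"coding_accuracy": 0.95, "denial_summary": 0.84, "note_extraction": 0.87}

regressions = find_regressions(baseline_scores, candidate_scores)
print(regressions)
```

Running a check like this on every model or prompt change turns "it feels lazier" into a measurable signal that can block a release.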

Want to learn more about our AI governance processes? Let’s chat!

